kubeasz

Last updated: December 5, 2023 (evening)

https://github.com/easzlab/kubeasz

https://github.com/easzlab/kubeasz/blob/master/docs/setup/00-planning_and_overall_intro.md

| Role | IP | Hostname | Notes |
|---|---|---|---|
| master1 | 10.0.1.1/21 | k8s-master1.ljk.cn | also runs ansible |
| master2 | 10.0.1.2/21 | k8s-master2.ljk.cn | |
| node1 | 10.0.1.3/21 | k8s-node1.ljk.cn | |
| node2 | 10.0.1.4/21 | k8s-node2.ljk.cn | |
| etcd1 | 10.0.2.1/21 | k8s-etcd1.ljk.cn | |
| etcd2 | 10.0.2.2/21 | k8s-etcd2.ljk.cn | |
| etcd3 | 10.0.2.3/21 | k8s-etcd3.ljk.cn | |
| HAProxy1 + keepalived (load balancer for the masters) | 10.0.2.1/21, VIP 10.0.2.188:8443 | | shares a host with etcd1 |
| HAProxy2 + keepalived (load balancer for the masters) | 10.0.2.2/21, VIP 10.0.2.188:8443 | | shares a host with etcd2 |
| harbor | 10.0.2.3/21 | | shares a host with etcd3 |
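Every address in the table, including the VIP, uses a /21 prefix. As a quick sanity check, the sketch below tests whether an address falls inside a CIDR block using plain bash arithmetic. The function names `ip_to_int` and `ip_in_cidr` are illustrative, and the network `10.0.0.0/21` is inferred from the prefix length in the table rather than stated explicitly.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local -a o
    IFS=. read -r -a o <<< "$1"
    echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if address $1 lies inside CIDR block $2 (for example 10.0.0.0/21).
ip_in_cidr() {
    local ip=$1 net=${2%/*} bits=${2#*/}
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# Usage (assumed network 10.0.0.0/21):
#   for ip in 10.0.1.1 10.0.2.3 10.0.2.188; do
#       ip_in_cidr "$ip" 10.0.0.0/21 && echo "$ip is inside" || echo "$ip is OUTSIDE"
#   done
```

A /21 spans 10.0.0.0 through 10.0.7.255, so the masters, nodes, etcd hosts, and the VIP all share one network under that assumption.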

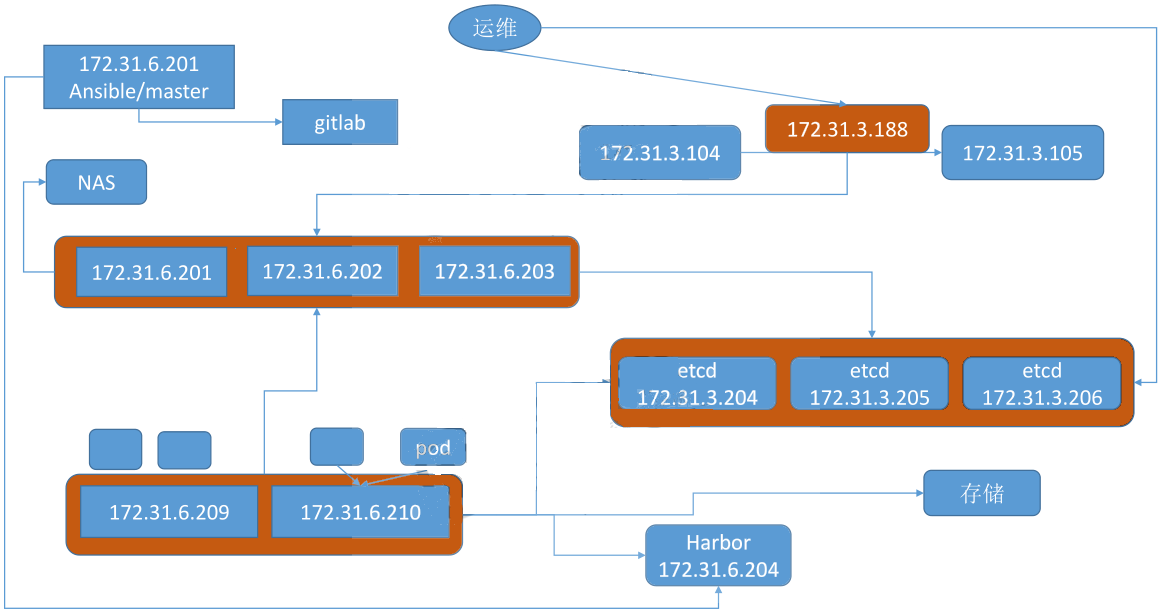

架构图:

Preparation

Calibrate the time zone and synchronize time

On all servers, set the time zone:

timedatectl set-timezone Asia/Shanghai

Make sure every node uses the same time zone and that the clocks are synchronized. If your environment does not provide NTP time synchronization, installing chrony is recommended.

[root@k8s-master1 ~]$apt install -y chrony

[root@k8s-master1 ~]$sed -n '/^#/!p' /etc/chrony/chrony.conf

server ntp.aliyun.com iburst

server time1.cloud.tencent.com iburst

keyfile /etc/chrony/chrony.keys

driftfile /var/lib/chrony/chrony.drift

logdir /var/log/chrony

maxupdateskew 100.0

rtcsync

makestep 1 3

allow 10.0.0.0/16

allow 10.0.1.0/16

allow 10.0.2.0/16

allow 10.0.3.0/16

allow 10.0.4.0/16

allow 10.0.5.0/16

allow 10.0.6.0/16

allow 10.0.7.0/16

local stratum 10

[root@k8s-master1 ~]$systemctl restart chronyd.service

# other nodes

$apt install -y chrony

$vim /etc/chrony/chrony.conf

...

server 10.0.1.1 iburst # change only this line

...

$systemctl restart chronyd.service
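To confirm that a node is actually synchronized after the restart, one option is to inspect `chronyc tracking`. The sketch below only parses that command's text output. The function name `chrony_is_synced` is illustrative, and it assumes the output contains a `Leap status` line that reads `Normal` once the clock is synchronized.

```shell
#!/usr/bin/env bash
# Reads `chronyc tracking` output on stdin and succeeds only if the
# "Leap status" field is "Normal".
chrony_is_synced() {
    awk -F': *' '/^Leap status/ { found = 1; ok = ($2 ~ /^Normal/) }
                 END { exit !(found && ok) }'
}

# Usage on any node:
#   chronyc tracking | chrony_is_synced && echo "synced" || echo "NOT synced"
```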

python

kubeasz is built on ansible, and ansible is written in python, so every server needs a python environment

apt update && apt install python3 -y

ansible

Install ansible on master1

[root@k8s-master1 ~]$apt-get install git python3-pip -y

# speed up pip with a mirror

[root@k8s-master1 ~]$mkdir -p ~/.pip && vim ~/.pip/pip.conf # create the file if it does not exist

[global]

index-url = https://mirrors.aliyun.com/pypi/simple/

[install]

trusted-host=mirrors.aliyun.com

[root@k8s-master1 ~]$pip3 install ansible # this step is slow; switching pip to the Aliyun mirror configured above is recommended

Set up passwordless SSH

function ssh_push_key() {

ips=(

10.0.1.2

10.0.1.3

10.0.1.4

10.0.2.1

10.0.2.2

10.0.2.3

)

[ "$1" ] && ips=("$@")

apt install -y sshpass

[ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' >/dev/null 2>&1

export SSHPASS=ljkk

for ip in "${ips[@]}"; do

(

timeout 5 ssh $ip echo "$ip: SSH has passwordless access!"

if [ $? != 0 ]; then

sshpass -e ssh-copy-id -o StrictHostKeyChecking=no root@$ip >/dev/null 2>&1

timeout 5 ssh $ip echo "$ip: SSH has passwordless access!" || echo "$ip: SSH has no passwordless access!"

fi

) &

done

wait

}

ssh_push_key

keepalived

Install keepalived on both 10.0.2.1/21 and 10.0.2.2/21

# install

$apt install keepalived -y

$vim /etc/keepalived/keepalived.conf

global_defs {

router_id keepalived1

vrrp_skip_check_adv_addr

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.0.18

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 1 # must be identical on both keepalived nodes, otherwise each one elects itself MASTER

priority 100 # set to 120 on the other keepalived

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.2.188 dev eth0 label eth0:0

}

}
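Once keepalived has been restarted with this configuration on both nodes, exactly one of them should hold the VIP. A minimal way to check, assuming the `ip` tool from iproute2 is available; the function name `holds_vip` is illustrative and it only inspects text piped into it.

```shell
#!/usr/bin/env bash
# Reads `ip -o -4 addr show` output on stdin and succeeds if the given
# address is configured on any interface.
holds_vip() {
    grep -qF "inet $1/"
}

# Usage on each load balancer node:
#   ip -o -4 addr show | holds_vip 10.0.2.188 && echo "this node holds the VIP"
```

If both nodes report the VIP at the same time, the two instances are not seeing each other's VRRP advertisements, so the `virtual_router_id`, `auth_pass`, and multicast settings are the first things to compare.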

$systemctl restart keepalived.service

haproxy

Install haproxy on both 10.0.2.1/21 and 10.0.2.2/21

# build and install lua from source (steps omitted)...

# build and install haproxy from source

$wget http://www.haproxy.org/download/2.2/src/haproxy-2.2.6.tar.gz

$apt install make gcc build-essential libssl-dev zlib1g-dev libpcre3 libpcre3-dev libsystemd-dev libreadline-dev -y

$useradd -r -s /sbin/nologin -d /var/lib/haproxy haproxy

$mkdir /usr/local/haproxy

$tar zxvf haproxy-2.2.6.tar.gz

$cd haproxy-2.2.6/

$make ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 USE_SYSTEMD=1 USE_LUA=1 LUA_INC=/usr/local/lua/src/ LUA_LIB=/usr/local/lua/src/

$make install PREFIX=/usr/local/haproxy

$vim /etc/profile # export PATH=/usr/local/haproxy/sbin:$PATH

$. /etc/profile

$haproxy -v

$haproxy -V

$haproxy -vv

# configuration file

$mkdir /var/lib/haproxy

$mkdir /etc/haproxy

$vim /etc/haproxy/haproxy.cfg

global

chroot /usr/local/haproxy

stats socket /var/lib/haproxy/haproxy.sock mode 600 level admin

user haproxy

group haproxy

daemon

nbproc 1

maxconn 100000

pidfile /var/lib/haproxy/haproxy.pid

defaults

option redispatch

option abortonclose

option http-keep-alive

option forwardfor

maxconn 100000

mode http

timeout connect 120s

timeout server 120s

timeout client 120s

timeout check 5s

listen stats

stats enable

mode http

bind 0.0.0.0:9999

log global

stats uri /haproxy-status

stats auth admin:123456

listen k8s-api-8443

bind 10.0.2.188:8443 # note: must match the [ex-lb] entries in the hosts file

mode tcp

server master1 10.0.1.1:6443 check inter 2000 fall 3 rise 5 # 6443 is the default apiserver port; do not change it

server master2 10.0.1.2:6443 check inter 2000 fall 3 rise 5

# systemd unit file

$mkdir /etc/haproxy/conf.d/

$vim /lib/systemd/system/haproxy.service

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d/ -c -q

ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d/ -p /var/lib/haproxy/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target
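After the service has been started, it is worth confirming that the VIP port and the apiserver backends accept TCP connections. The sketch below uses bash's built-in `/dev/tcp` pseudo-device; the function name `port_open` and the 2-second timeout are illustrative choices. If haproxy on the standby node refuses to start because it cannot bind 10.0.2.188, a common cause is that the kernel setting `net.ipv4.ip_nonlocal_bind` is not enabled there; this is an assumption about the environment, not something the setup above configures.

```shell
#!/usr/bin/env bash
# Succeeds if a TCP connection to host:port can be opened within 2 seconds.
# Relies on bash's /dev/tcp redirection, so no extra tools are required.
port_open() {
    local host=$1 port=$2
    timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Usage:
#   port_open 10.0.2.188 8443 && echo "VIP is accepting connections"
#   port_open 10.0.1.1 6443   && echo "master1 apiserver is reachable"
```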

$systemctl start haproxy.service

Download the project source

$export release=2.2.4

$curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup

$chmod +x ./easzup

# download using the helper script

$./easzup -D

Configure cluster parameters

cd /etc/ansible

cp example/hosts.multi-node hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

# variable 'NODE_NAME' is the distinct name of a member in 'etcd' cluster

[etcd]

10.0.2.1 NODE_NAME=etcd1

10.0.2.2 NODE_NAME=etcd2

10.0.2.3 NODE_NAME=etcd3

# master node(s)

[kube-master]

10.0.1.1

10.0.1.2

# work node(s)

[kube-node]

10.0.1.3

#10.0.1.4

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one

# 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'

[harbor]

#192.168.1.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no SELF_SIGNED_CERT=yes

# [optional] loadbalance for accessing k8s from outside

[ex-lb]

10.0.2.1 LB_ROLE=backup EX_APISERVER_VIP=10.0.2.188 EX_APISERVER_PORT=8443

10.0.2.2 LB_ROLE=master EX_APISERVER_VIP=10.0.2.188 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="192.168.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.10.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-60000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="ljk.local."

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/ansible"

Verify that the configuration works:

$ansible all -m ping

Installation
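Before running the playbooks, it can help to list exactly which hosts each inventory group contains, since a commented-out entry (such as `#10.0.1.4` under `[kube-node]`) is easy to miss. The following is a minimal sketch that parses the INI-style hosts file with awk; the function name `inventory_group_hosts` is illustrative and not part of kubeasz or ansible.

```shell
#!/usr/bin/env bash
# Print the host entries listed under one [group] of an ansible INI inventory.
# Blank lines and lines starting with '#' are skipped.
inventory_group_hosts() {
    local file=$1 group=$2
    awk -v g="[$group]" '
        /^\[/ { in_group = ($1 == g); next }        # a new section starts here
        in_group && NF && $1 !~ /^#/ { print $1 }   # first field is the host
    ' "$file"
}

# Usage:
#   inventory_group_hosts /etc/ansible/hosts etcd
#   inventory_group_hosts /etc/ansible/hosts kube-node
```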

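Configure harbor

10.0.2.3; for detailed steps see: Docker registry management

Because of my laptop's limited resources, harbor and etcd share one node, so harbor's hostname is configured as an IP address. If harbor runs on a dedicated server, it should be configured with a hostname instead.

00-Cluster planning and configuration overview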

https://github.com/easzlab/kubeasz/blob/master/docs/setup/00-planning_and_overall_intro.md
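The numbered steps that follow each run one playbook from /etc/ansible. As a sketch, they can be chained so that the run stops at the first failure. The function name `run_playbooks` and the `RUNNER` override (useful for a dry run) are illustrative and not part of kubeasz; the playbook file names are the ones used in the steps of this article.

```shell
#!/usr/bin/env bash
# Run the given playbooks in order and stop at the first failure.
# RUNNER can be overridden (for example RUNNER=echo) to rehearse the sequence.
run_playbooks() {
    local runner=${RUNNER:-ansible-playbook} pb
    for pb in "$@"; do
        echo "==> $pb"
        "$runner" "$pb" || { echo "FAILED: $pb" >&2; return 1; }
    done
}

# Usage (from /etc/ansible):
#   run_playbooks 01.prepare.yml 02.etcd.yml 03.docker.yml \
#                 04.kube-master.yml 05.kube-node.yml
```

Steps 06 and 07 are left out of the usage line on purpose, because this walkthrough edits role defaults by hand before running them.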

01-Create certificates and prepare for installation

https://github.com/easzlab/kubeasz/blob/master/docs/setup/01-CA_and_prerequisite.md

[root@k8s-master1 ansible]$pwd

/etc/ansible

[root@k8s-master1 ansible]$ansible-playbook ./01.prepare.yml

02-Install the etcd cluster

https://github.com/easzlab/kubeasz/blob/master/docs/setup/02-install_etcd.md

[root@k8s-master1 ansible]$ansible-playbook ./02.etcd.yml

03-Install the docker service

https://github.com/easzlab/kubeasz/blob/master/docs/setup/03-install_docker.md

[root@k8s-master1 ansible]$ansible-playbook 03.docker.yml

04-Install the master nodes

https://github.com/easzlab/kubeasz/blob/master/docs/setup/04-install_kube_master.md

[root@k8s-master1 ansible]$ansible-playbook 04.kube-master.yml

# Error: the target host has no /opt/kube directory

TASK [kube-master : 配置admin用户rbac权限] ****************************************************************************************

fatal: [10.0.1.2]: FAILED! => {"changed": false, "checksum": "95ce2ef4052ea55b6326ce0fe9f6da49319874c7", "msg": "Destination directory /opt/kube does not exist"}

changed: [10.0.1.1]

# Fix: create the /opt/kube directory manually on the target host

[root@k8s-master2 ~]$mkdir -p /opt/kube

# re-run ansible-playbook

[root@k8s-master1 ansible]$ansible-playbook 04.kube-master.yml

05-Install the worker nodes

https://github.com/easzlab/kubeasz/blob/master/docs/setup/05-install_kube_node.md

[root@k8s-master1 ansible]$ansible-playbook 05.kube-node.yml

06-Install the cluster network

https://github.com/easzlab/kubeasz/blob/master/docs/setup/06-install_network_plugin.md

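flannel is recommended on public clouds and calico in self-hosted data centers. If you choose calico, be sure to disable IPIP (this assumes all nodes sit on the same layer-2 network, as they do in this setup).

[root@k8s-master1 ansible]$vim roles/calico/defaults/main.yml

CALICO_IPV4POOL_IPIP: "off" # change always to off to disable IPIP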
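The same edit can be made non-interactively. This is a minimal sketch assuming GNU sed and that the key starts at the beginning of a line in the defaults file; the function name `set_calico_ipip` is illustrative.

```shell
#!/usr/bin/env bash
# Rewrite the CALICO_IPV4POOL_IPIP value in a role defaults file, then
# print the resulting line so the change can be confirmed by eye.
set_calico_ipip() {
    local file=$1 mode=$2
    sed -i "s/^CALICO_IPV4POOL_IPIP:.*/CALICO_IPV4POOL_IPIP: \"$mode\"/" "$file"
    grep '^CALICO_IPV4POOL_IPIP:' "$file"
}

# Usage (from /etc/ansible):
#   set_calico_ipip roles/calico/defaults/main.yml off
```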

[root@k8s-master1 ansible]$ansible-playbook 06.network.yml

07-Install cluster add-ons

https://github.com/easzlab/kubeasz/blob/master/docs/setup/07-install_cluster_addon.md

Install dashboard

Deploy:

[root@k8s-master1 ansible]$cd manifests/dashboard/

[root@k8s-master1 dashboard]$pwd

/etc/ansible/manifests/dashboard

# deploy the main dashboard yaml manifest

[root@k8s-master1 dashboard]$kubectl apply -f ./kubernetes-dashboard.yaml

# create the read-write admin Service Account

[root@k8s-master1 dashboard]$kubectl apply -f ./admin-user-sa-rbac.yaml

# create the read-only read Service Account

[root@k8s-master1 dashboard]$kubectl apply -f ./read-user-sa-rbac.yaml

Verify:

# check the pod status

[root@k8s-master1 dashboard]$kubectl get pod -n kube-system | grep dashboard

dashboard-metrics-scraper-79c5968bdc-cqg8b 1/1 Running 0 60m

kubernetes-dashboard-c4c6566d6-crqfc 1/1 Running 0 60m

# 查看dashboard service

[root@k8s-master1 dashboard]$kubectl get service -n kube-system|grep dashboard

dashboard-metrics-scraper ClusterIP 192.168.233.194 <none> 8000/TCP 60m

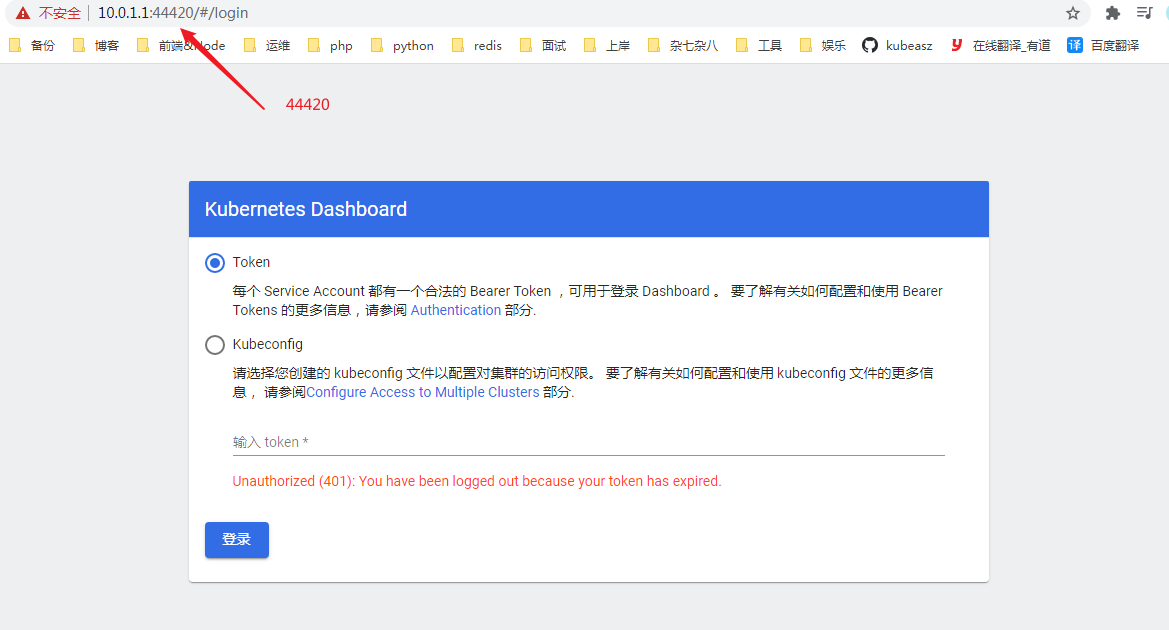

kubernetes-dashboard NodePort 192.168.249.138 <none> 443:44420/TCP 60m # 44420

# 查看集群服务

$kubectl cluster-info|grep dashboard

# 查看pod 运行日志

$kubectl logs kubernetes-dashboard-c4c6566d6-crqfc -n kube-system访问:
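The dashboard is exposed as a NodePort service, so it should be reachable over HTTPS on any node's IP at the port shown in the service listing (44420 in this run). The sketch below pulls that port out of the `kubectl get service` text output; the function name `service_node_port` is illustrative, and it assumes the default column layout (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE).

```shell
#!/usr/bin/env bash
# Reads `kubectl get service -n <ns>` output on stdin and prints the
# NodePort of the named service.
service_node_port() {
    awk -v name="$1" '$1 == name && $2 == "NodePort" {
        split($5, parts, "[:/]")   # "443:44420/TCP" -> 443, 44420, TCP
        print parts[2]
    }'
}

# Usage:
#   port=$(kubectl get service -n kube-system | service_node_port kubernetes-dashboard)
#   echo "https://10.0.1.3:${port}"
```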

登录:

token:令牌

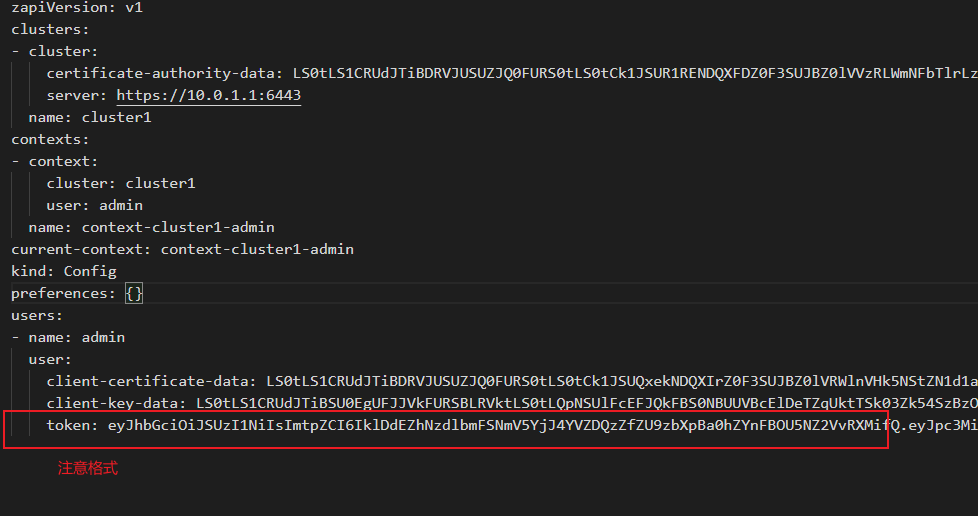

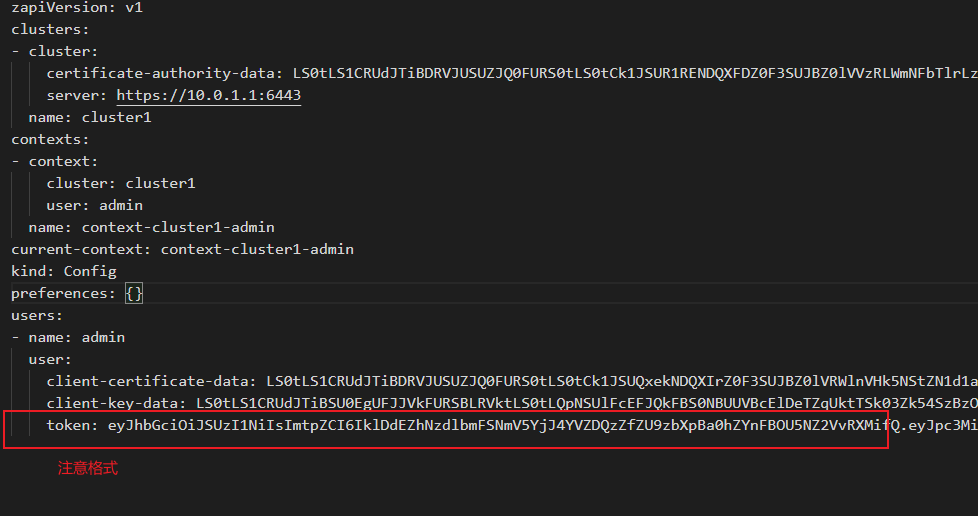

# 查看用户 admin-user 的 token $kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') # 查看用户 read-user 的 token $kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep read-user | awk '{print $1}')kubeconfig:配置文件

将 token 追加到 /root/.kube/config 文件中,然后将此文件作为登录的凭证
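To get just the token value without copying it out of the `describe` output by hand, something like the following could be used. It is a sketch that assumes the output contains a line of the form `token:      <value>`; the function name `extract_token` is illustrative.

```shell
#!/usr/bin/env bash
# Reads `kubectl describe secret` output on stdin and prints only the token.
extract_token() {
    awk '$1 == "token:" { print $2; exit }'
}

# Usage:
#   secret=$(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
#   kubectl -n kube-system describe secret "$secret" | extract_token
```

The printed value is what goes into the kubeconfig as the user's `token:` field. Working on a copy of /root/.kube/config keeps the original credentials intact.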

Test the network

# create a few test pods

# note: /etc/docker/daemon.json on each node must contain "insecure-registries": ["10.0.2.3"]

kubectl run net-test1 --image=10.0.2.3/baseimages/alpine:3.13 sleep 360000

kubectl run net-test2 --image=10.0.2.3/baseimages/alpine:3.13 sleep 360000

kubectl run net-test3 --image=10.0.2.3/baseimages/alpine:3.13 sleep 360000

# list the pods and make sure they run on different nodes; only then does the test exercise cross-node networking

$kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 7m11s 10.10.210.4 10.0.1.4 <none> <none>

net-test2 1/1 Running 0 2m27s 10.10.210.5 10.0.1.4 <none> <none>

net-test3 1/1 Running 0 2m6s 10.10.246.66 10.0.1.3 <none> <none>
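Whether the test pods really landed on more than one node can also be checked mechanically. The sketch below counts distinct values in the NODE column of the `-o wide` listing; the function name `count_pod_nodes` is illustrative, and it assumes NODE is the seventh whitespace-separated column, as in the output above.

```shell
#!/usr/bin/env bash
# Reads `kubectl get pod -o wide` output on stdin (header included) and
# prints how many distinct nodes the listed pods run on.
count_pod_nodes() {
    awk 'NR > 1 && !seen[$7]++ { n++ } END { print n + 0 }'
}

# Usage:
#   kubectl get pod -o wide | count_pod_nodes
```

A result of 1 means all pods share a node, in which case a successful ping says nothing about traffic between nodes.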

# 测试:进入pod,ping其他node的pod的ip,确保能ping通安装 CoreDNS

# 进入到pod中,无法ping通域名,这时因为没有配置dns

$ping kubernetes.default.svc.ljk.local

ping: bad address 'kubernetes.default.svc.ljk.local'自行安装

coredns 官方 github 提供的安装脚本,依赖 kube-dns,所以我们使用 kubernetes 官方提供的 yaml 进行安装

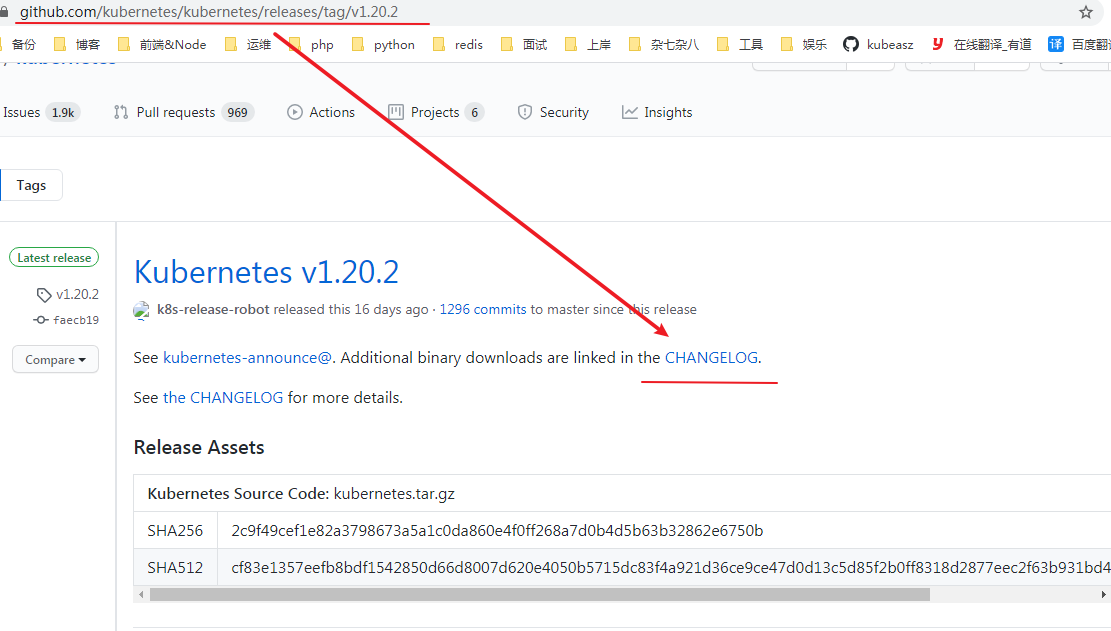

去 kubernetes 的官方 github 仓库,下载二进制文件

打开后,往下拉,下载 client、node、server 三个源码包,注意要下载 amd64 版本,不要下载错了

https://dl.k8s.io/v1.20.2/kubernetes.tar.gz

https://dl.k8s.io/v1.20.2/kubernetes-client-linux-amd64.tar.gz

https://dl.k8s.io/v1.20.2/kubernetes-node-linux-amd64.tar.gz

https://dl.k8s.io/v1.20.2/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master1 src]$tar zxf kubernetes.tar.gz

[root@k8s-master1 src]$tar zxf kubernetes-client-linux-amd64.tar.gz

[root@k8s-master1 src]$tar zxf kubernetes-node-linux-amd64.tar.gz

[root@k8s-master1 src]$tar zxf kubernetes-server-linux-amd64.tar.gz

[root@k8s-master1 src]$cd kubernetes/

[root@k8s-master1 kubernetes]$ll

total 33672

drwxr-xr-x 10 root root 4096 Jan 13 21:44 ./

drwxr-xr-x 3 root root 4096 Jan 29 19:38 ../

drwxr-xr-x 2 root root 4096 Jan 13 21:44 addons/

drwxr-xr-x 3 root root 4096 Jan 13 21:40 client/

drwxr-xr-x 9 root root 4096 Jan 13 21:46 cluster/

drwxr-xr-x 2 root root 4096 Jan 13 21:46 docs/

drwxr-xr-x 3 root root 4096 Jan 13 21:46 hack/

-rw-r--r-- 1 root root 34426961 Jan 13 21:44 kubernetes-src.tar.gz

drwxr-xr-x 3 root root 4096 Jan 13 21:44 LICENSES/

drwxr-xr-x 3 root root 4096 Jan 13 21:41 node/

-rw-r--r-- 1 root root 3386 Jan 13 21:46 README.md

drwxr-xr-x 3 root root 4096 Jan 13 21:41 server/

-rw-r--r-- 1 root root 8 Jan 13 21:46 version

[root@k8s-master1 kubernetes]$ll server/bin/ # these are all binaries written in Go and can be executed directly

...

[root@k8s-master1 kubernetes]$cd ./cluster/addons/dns/coredns/

[root@k8s-master1 coredns]$ll

total 44

drwxr-xr-x 2 root root 4096 Jan 29 21:39 ./

drwxr-xr-x 5 root root 4096 Jan 13 21:46 ../

-rw-r--r-- 1 root root 4957 Jan 13 21:46 coredns.yaml.base

-rw-r--r-- 1 root root 5007 Jan 13 21:46 coredns.yaml.in # derived from coredns.yaml.base

-rw-r--r-- 1 root root 5009 Jan 13 21:46 coredns.yaml.sed # derived from coredns.yaml.base

-rw-r--r-- 1 root root 1075 Jan 13 21:46 Makefile

-rw-r--r-- 1 root root 344 Jan 13 21:46 transforms2salt.sed

-rw-r--r-- 1 root root 287 Jan 13 21:46 transforms2sed.sed

[root@k8s-master1 coredns]$mkdir -p /etc/ansible/manifests/dns/coredns # plan the directory layout

[root@k8s-master1 coredns]$cp coredns.yaml.base /etc/ansible/manifests/dns/coredns/coredns.yaml

[root@k8s-master1 coredns]$cd /etc/ansible/manifests/dns/coredns/

[root@k8s-master1 coredns]$ls

coredns.yaml

[root@k8s-master1 coredns]$vim coredns.yaml

# edit the following lines

image: 10.0.2.3/baseimages/coredns:1.7.0 # point the image at the local harbor

resources:

limits:

memory: 512Mi # memory limit; allocate more in production

kubernetes ljk.local in-addr.arpa ip6.arpa { # set the cluster domain to ljk.local

pods insecure

fallthrough in-addr.arpa ip6.arpa

ttl 30

}

clusterIP: 192.168.0.2 # the DNS service IP; it must match the nameserver in a pod's /etc/resolv.conf

[root@k8s-master1 coredns]$kubectl apply -f coredns.yaml

[root@k8s-master1 coredns]$kubectl get pod -n kube-system | grep coredns

coredns-7589f7f68b-jp5kz 1/1 Running 0 39m

# check the service IPs

$kubectl get service -o wide -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 29h <none>

kube-system dashboard-metrics-scraper ClusterIP 192.168.233.194 <none> 8000/TCP 6h27m k8s-app=dashboard-metrics-scraper

kube-system kube-dns ClusterIP 192.168.0.2 <none> 53/UDP,53/TCP,9153/TCP 47m k8s-app=kube-dns

kube-system kubernetes-dashboard NodePort 192.168.249.138 <none> 443:44420/TCP 6h27m k8s-app=kubernetes-dashboard

Enter a pod through the dashboard and test:

# <service name>.<namespace>.svc.ljk.local

/ # nslookup kubernetes.default.svc.ljk.local

Server: 192.168.0.2

Address: 192.168.0.2#53

Name: kubernetes.default.svc.ljk.local

Address: 192.168.0.1 # test succeeded

/ # nslookup dashboard-metrics-scraper.kube-system.svc.ljk.local

Server: 192.168.0.2

Address: 192.168.0.2#53

Name: dashboard-metrics-scraper.kube-system.svc.ljk.local

Address: 192.168.233.194

Install with kubeasz

[root@k8s-master ansible]$vim roles/cluster-addon/defaults/main.yml

# disable automatic installation of the other add-ons and install only coredns

metricsserver_install: "no"

dashboard_install: "no"

ingress_install: "no"

[root@k8s-master ansible]$ansible-playbook ./07.cluster-addon.yml

...

# test: enter any pod

/ # nslookup kubernetes.default.svc.ljk.local

Server: 192.168.0.2

Address: 192.168.0.2:53

Name: kubernetes.default.svc.ljk.local

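Address: 192.168.0.1

About load balancing

kubeasz's approach is to install a haproxy on every node. When there is more than one master, traffic between the node and the masters goes through that local haproxy.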

[root@k8s-node1 kubernetes]$cat ~/.kube/config | grep server

server: https://127.0.0.1:6443

[root@k8s-node1 kubernetes]$cat /etc/kubernetes/kube-proxy.kubeconfig | grep server

server: https://127.0.0.1:6443

[root@k8s-node1 kubernetes]$cat /etc/kubernetes/kubelet.kubeconfig | grep server

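server: https://127.0.0.1:6443

However, this does not remove haproxy as a single point of failure on each node, and external access to the masters still has to go through the VIP. It is therefore simpler to build a single keepalived + haproxy pair and have everything, on masters and nodes alike, talk to the apiserver through the VIP. kubeasz ships a playbook that sets up keepalived + haproxy; uncomment it in 01.prepare.yml, or build the pair yourself.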
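When switching components from the local haproxy to the VIP, it helps to see at a glance which apiserver endpoint each kubeconfig points at. A minimal sketch, with the illustrative function name `kubeconfig_server`; it prints the first `server:` entry of a file and does not handle kubeconfigs that define several clusters.

```shell
#!/usr/bin/env bash
# Print the first apiserver URL found in a kubeconfig file.
kubeconfig_server() {
    awk '$1 == "server:" { print $2; exit }' "$1"
}

# Usage on a node:
#   for f in ~/.kube/config /etc/kubernetes/kube-proxy.kubeconfig \
#            /etc/kubernetes/kubelet.kubeconfig; do
#       printf '%s -> %s\n' "$f" "$(kubeconfig_server "$f")"
#   done
```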

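Usage

Adding nodes

This is very simple

Add a master

https://github.com/easzlab/kubeasz/blob/master/docs/op/op-master.md

easzctl add-master $ip

Add a node

https://github.com/easzlab/kubeasz/blob/master/docs/op/op-node.md

easzctl add-node $ip

Cluster upgrade

Minor version upgrade

Download the latest kubernetes release and extract all of the archives. The kubernetes/server/bin directory then contains the compiled binaries.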

kubernetes-client-linux-amd64.tar.gz

kubernetes-node-linux-amd64.tar.gz

kubernetes-server-linux-amd64.tar.gz

kubernetes.tar.gz

# extract all four archives above to get a single directory: kubernetes

$pwd

/usr/local/src/kubernetes/server/bin

$ll

total 986844

drwxr-xr-x 2 root root 4096 Feb 19 00:26 ./

drwxr-xr-x 3 root root 4096 Feb 19 00:30 ../

-rwxr-xr-x 1 root root 46710784 Feb 19 00:26 apiextensions-apiserver*

-rwxr-xr-x 1 root root 39251968 Feb 19 00:26 kubeadm*

-rwxr-xr-x 1 root root 44699648 Feb 19 00:26 kube-aggregator*

-rwxr-xr-x 1 root root 118218752 Feb 19 00:26 kube-apiserver*

-rw-r--r-- 1 root root 8 Feb 19 00:24 kube-apiserver.docker_tag

-rw------- 1 root root 123035136 Feb 19 00:24 kube-apiserver.tar

-rwxr-xr-x 1 root root 112758784 Feb 19 00:26 kube-controller-manager*

-rw-r--r-- 1 root root 8 Feb 19 00:24 kube-controller-manager.docker_tag

-rw------- 1 root root 117575168 Feb 19 00:24 kube-controller-manager.tar

-rwxr-xr-x 1 root root 40263680 Feb 19 00:26 kubectl*

-rwxr-xr-x 1 root root 114113512 Feb 19 00:26 kubelet*

-rwxr-xr-x 1 root root 39518208 Feb 19 00:26 kube-proxy*

-rw-r--r-- 1 root root 8 Feb 19 00:24 kube-proxy.docker_tag

-rw------- 1 root root 120411648 Feb 19 00:24 kube-proxy.tar

-rwxr-xr-x 1 root root 43745280 Feb 19 00:26 kube-scheduler*

-rw-r--r-- 1 root root 8 Feb 19 00:24 kube-scheduler.docker_tag

-rw------- 1 root root 48561664 Feb 19 00:24 kube-scheduler.tar

-rwxr-xr-x 1 root root 1634304 Feb 19 00:26 mounter*

master

Stop the services

$systemctl stop kube-apiserver.service kubelet.service kube-scheduler.service kube-controller-manager.service kube-proxy.service

Copy the new binaries over the old ones

scp kube-apiserver kubelet kube-scheduler kube-controller-manager kube-proxy kubectl 10.0.1.31:/usr/local/bin

Start the services

$systemctl start kube-apiserver.service kubelet.service kube-scheduler.service kube-controller-manager.service kube-proxy.service

Note: because the masters sit behind haproxy + keepalived, the upgrade is safe as long as the masters are not all stopped at the same time. In practice, even stopping every master for a short while is tolerable.

node

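Stop the services

$systemctl stop kubelet.service kube-proxy.service

Copy the new binaries over the old ones

scp kubelet kube-proxy kubectl 10.0.1.33:/usr/local/bin

Start the services

$systemctl start kubelet.service kube-proxy.service

Note: stopping kubelet and kube-proxy does not affect access to Pods that have already been created

If there are many nodes, write a script that upgrades them in a loop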
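One possible shape for such a script is sketched below. It repeats the three manual steps (stop, copy, start) for each node in turn and stops at the first failure. The function names, the `DRY_RUN` switch, and the `BIN_DIR` variable are illustrative; it assumes passwordless SSH as root is already in place and that it is run from kubernetes/server/bin, where the new binaries live.

```shell
#!/usr/bin/env bash
BIN_DIR=${BIN_DIR:-/usr/local/bin}
NODE_BINARIES=(kubelet kube-proxy kubectl)
NODE_SERVICES=(kubelet.service kube-proxy.service)

# Execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Upgrade a single node: stop services, copy binaries, start services.
upgrade_node() {
    local ip=$1
    run ssh "$ip" systemctl stop "${NODE_SERVICES[@]}" || return 1
    run scp "${NODE_BINARIES[@]}" "$ip:$BIN_DIR" || return 1
    run ssh "$ip" systemctl start "${NODE_SERVICES[@]}" || return 1
}

# Upgrade nodes one at a time so that a failure leaves the rest untouched.
upgrade_nodes() {
    local ip
    for ip in "$@"; do
        upgrade_node "$ip" || { echo "upgrade failed on $ip" >&2; return 1; }
    done
}

# Usage:
#   DRY_RUN=1 upgrade_nodes 10.0.1.3 10.0.1.4   # print the commands only
#   upgrade_nodes 10.0.1.3 10.0.1.4             # perform the upgrade
```

Running with `DRY_RUN=1` first makes it easy to review the exact commands before anything is stopped.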